Abstract

The human intrinsic desire to pursue knowledge, also known as curiosity, is considered essential in the process of skill acquisition. With the aid of artificial curiosity, we could equip current techniques for control, such as Reinforcement Learning, with more natural exploration capabilities. A promising approach in this respect uses Bayesian surprise on model parameters, i.e. a measure of the difference between prior and posterior beliefs, to favour exploration. In this contribution, we propose to apply Bayesian surprise in a latent space representing the agent’s current understanding of the dynamics of the system, drastically reducing the computational costs. We extensively evaluate our method by measuring the agent’s exploration of the environment on continuous tasks and the game scores achieved on video games. Our model is computationally cheap and compares favourably with current state-of-the-art methods on several problems. We also investigate the effects of stochasticity in the environment, which is often a failure case for curiosity-driven agents. In this regime, the results suggest that our approach is resilient to stochastic transitions.
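
As a rough illustration of the idea of Bayesian surprise as an intrinsic reward, the sketch below computes the KL divergence between a posterior and a prior belief over a latent variable. It assumes, purely for illustration, that both beliefs are diagonal Gaussians; the function names `gaussian_kl` and `intrinsic_reward` and the example numbers are hypothetical and are not taken from the released implementation.

```python
import math


def gaussian_kl(mu_q, sigma_q, mu_p, sigma_p):
    """KL(q || p) between two diagonal Gaussians.

    Each argument is a sequence of per-dimension means or standard deviations.
    """
    kl = 0.0
    for mq, sq, mp, sp in zip(mu_q, sigma_q, mu_p, sigma_p):
        kl += math.log(sp / sq) + (sq ** 2 + (mq - mp) ** 2) / (2 * sp ** 2) - 0.5
    return kl


def intrinsic_reward(prior, posterior):
    """Surprise signal: how far the posterior belief moved away from the prior.

    Assumption: `prior` and `posterior` are (means, std_devs) pairs describing
    the latent belief before and after observing a transition.
    """
    mu_p, sigma_p = prior
    mu_q, sigma_q = posterior
    return gaussian_kl(mu_q, sigma_q, mu_p, sigma_p)


# Hypothetical 2-dimensional latent beliefs.
prior = ([0.0, 0.0], [1.0, 1.0])
unsurprising_posterior = ([0.0, 0.0], [1.0, 1.0])  # observation changed nothing
surprising_posterior = ([1.0, -1.0], [0.5, 0.5])   # observation shifted the belief

reward_unsurprising = intrinsic_reward(prior, unsurprising_posterior)
reward_surprising = intrinsic_reward(prior, surprising_posterior)
```

In this toy setting, a transition that leaves the belief unchanged yields zero reward, while one that shifts and sharpens the belief yields a positive reward, so an agent maximising this signal would be drawn towards transitions its model has not yet learned to anticipate.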

Visual Control Zero-shot Benchmark

This experiment evaluates performance in a zero-shot learning setting (see Sekar et al. 2020 for details).

The model and the agent are trained without rewards, collecting data through exploration. Some of the curiosity-driven behaviours learned in this phase with Latent Bayesian Surprise are shown in the GIF, under the Exploration column.

Throughout the exploration process, snapshots of the agent’s model are used to train a task policy on the final task and to plot its zero-shot performance. These behaviours are shown under the Task column.

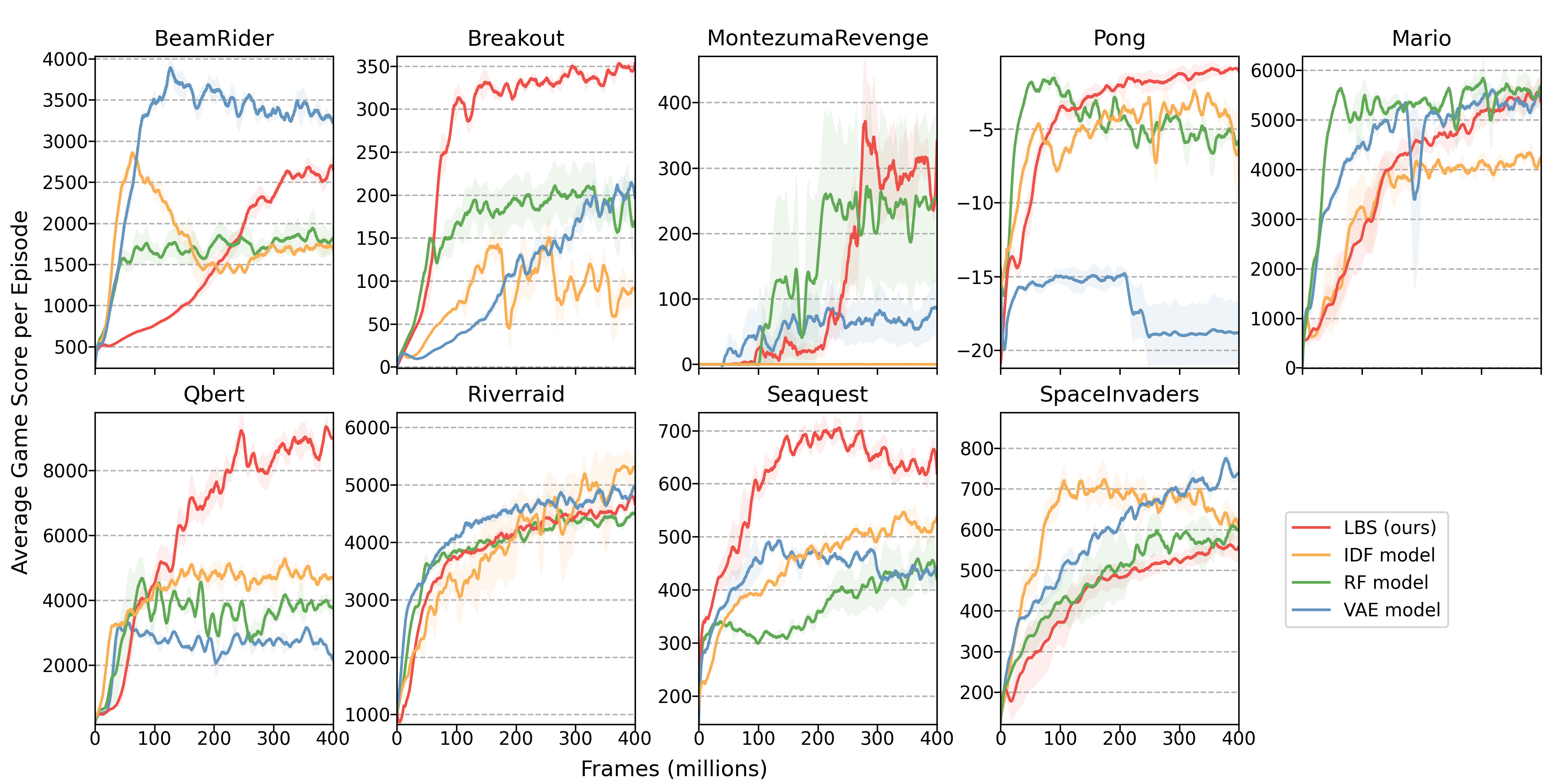

Arcade Games Experiments

The curves show the game score achieved during an episode of training. Agents learn using only the intrinsic motivation signal.

To encourage comparison against our baseline, we release the data used in the plots, which can be easily integrated with the original open-source implementation of Large-Scale Study of Curiosity-Driven Learning.

Videos of the agents playing the games, driven only by their curiosity, follow.